Traditional Data Centers vs. AI-Ready Data Centers: A New Era of Infrastructure

As artificial intelligence (AI) reshapes industries, data centers are undergoing a profound transformation to meet the growing demands of AI workloads.

Traditional data centers have long provided reliable environments for managing IT infrastructure, but the emergence of AI data centers is revolutionizing how we approach computing, storage, and networking.

AI is pushing the boundaries of what data centers can achieve, and its high-density needs require new approaches to facility design, infrastructure, power, and resource planning.

Traditional Data Centers: Tried and Tested

Traditional data centers are built to house servers, storage systems, networking equipment, and cooling infrastructure. These facilities are designed for CPU-based workloads that are predictable, incremental, and less power-intensive.

Key features of traditional data centers include:

- Balanced Cost-Performance: Designed for general-purpose computing with cost-efficiency in mind.

- Fragmented Scalability: Resources can scale as applications grow, but not at the rapid pace required for AI.

- Lower Power Density: Traditional racks draw around 5-10 kW each, and air-based cooling is sufficient for heat rejection.

- Standard Cabling Infrastructure: A “leaf-and-spine” cabling model supports steady network traffic that lacks the bandwidth demands of AI workloads.

- Low-Power CPU Workloads: CPU-based workloads consume significantly less power than power-hungry AI workloads, which require custom solutions for high capacity, on-demand performance, and specialized support.

Traditional data centers simply aren’t equipped to handle the unique demands of AI and accelerated computing workloads.

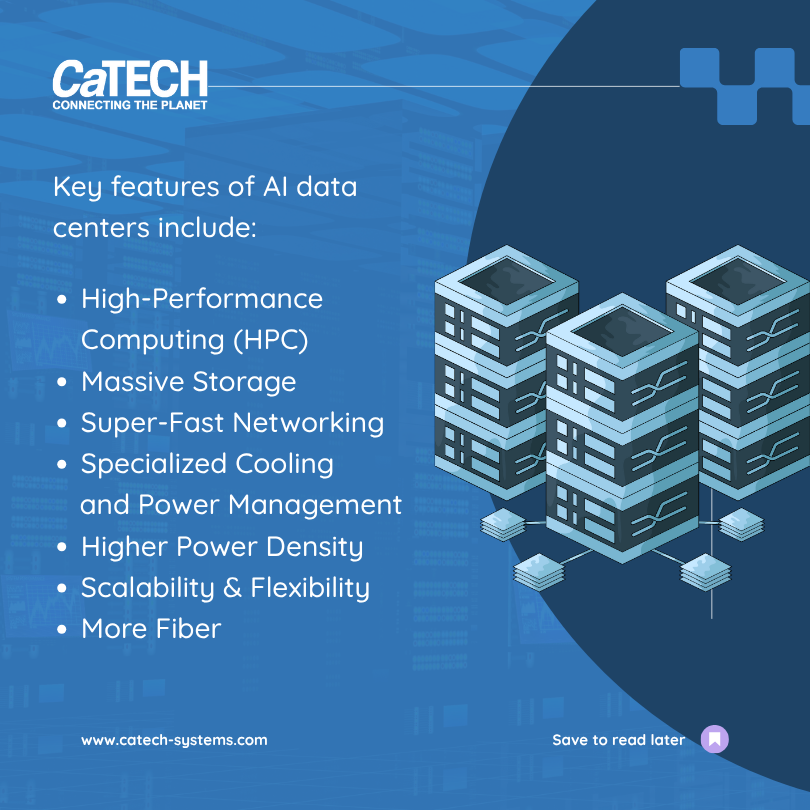

AI Data Centers: Built for the Future

As AI continues to disrupt industries, demand for specialized AI data centers has grown. Unlike their traditional counterparts, these next-generation facilities are purpose-built for the heavy, complex needs of AI workloads, combining high-performance computing (HPC), ultra-fast networking, and advanced cooling systems.

Key features of AI data centers:

- High-Performance Computing (HPC): Equipped with powerful systems built on accelerators such as GPUs, FPGAs, and ASICs (a category that includes TPUs and NPUs). These chips are designed for parallel processing, making them well suited to massive data crunching and machine learning tasks.

- Massive Storage Capacity: AI applications generate and consume vast amounts of data, requiring storage systems with immense capacity and high throughput. This calls for hybrid storage solutions that combine high-speed SSDs with distributed architectures.

- Blazing-Fast Networking: Ultra-fast, low-latency networking technologies, like high-bandwidth Ethernet and InfiniBand, facilitate rapid data transfer between compute nodes and storage systems.

- Specialized Cooling and Power Management: Because AI hardware is dense and power-hungry, advanced cooling is needed to prevent overheating. Liquid cooling, including direct-to-chip and immersion cooling, is essential for managing the heat generated by densely packed GPU racks, and intelligent power management is required to ensure optimal energy use.

- Higher Power Density: AI servers require 5-10x more power than traditional systems, with racks often drawing 40-110 kW each.

- Scalability and Flexibility: With AI technology evolving rapidly, AI data centers are designed with modular layouts, containerized deployments, and software-defined infrastructure, enabling quick adaptation to changing needs.

- More Fiber: AI servers demand 4-5x more fiber connections, requiring multimode fiber and active optical cables for high-speed, low-latency communication.
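To make the power-density figures above concrete, here is a minimal Python sketch that counts how many racks a given IT load would occupy at the per-rack ranges quoted in this article (5-10 kW for traditional racks, 40-110 kW for AI racks). The 1 MW load and the `racks_needed` helper are hypothetical, chosen only for illustration; real capacity planning must also account for cooling, redundancy, and power distribution limits.

```python
import math

# Per-rack power ranges (kW) as quoted in this article: (low, high).
TRADITIONAL_KW = (5, 10)
AI_KW = (40, 110)


def racks_needed(it_load_kw: float, kw_per_rack: float) -> int:
    """Racks required to host it_load_kw at a given per-rack draw, rounded up."""
    if kw_per_rack <= 0:
        raise ValueError("kw_per_rack must be positive")
    return math.ceil(it_load_kw / kw_per_rack)


if __name__ == "__main__":
    load_kw = 1_000  # hypothetical 1 MW IT load, for illustration only
    for label, (low, high) in (("traditional", TRADITIONAL_KW), ("AI", AI_KW)):
        # Denser racks (high kW) need fewer racks; sparser racks need more.
        fewest = racks_needed(load_kw, high)
        most = racks_needed(load_kw, low)
        print(f"{label}: {fewest}-{most} racks")
```

With these inputs the script prints 100-200 traditional racks versus 10-25 AI racks. The same power budget lands in far fewer racks, which is why each AI rack needs much more cooling and power delivery.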

To accommodate the dense, data-intensive nature of AI workloads, existing data center hardware and layouts must be revamped:

- Revamped hardware: Servers, switches, cables, and storage need to handle large quantities of real-time data.

- Reconfigured network backbone: Higher bandwidth is essential to ensure efficient communication between GPU racks and storage systems.

- Revised design elements: Cooling, power, and cabling systems need to support increased density and interconnectivity.
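A rough, idealized calculation shows why backbone bandwidth matters: link speed sets a lower bound on the time needed to move training data between storage and GPU racks. This Python sketch assumes a hypothetical 1 TB dataset and compares 10 and 100 Gbps links (illustrative values, not from this article) with the 400 Gbps figure mentioned for multimode fiber. It ignores protocol overhead, congestion, and storage throughput, so real transfers take longer.

```python
def transfer_seconds(data_bytes: float, link_gbps: float) -> float:
    """Idealized time to move data_bytes over a single link of link_gbps.

    Ignores protocol overhead, congestion, and storage limits,
    so this is a best-case lower bound.
    """
    if link_gbps <= 0:
        raise ValueError("link_gbps must be positive")
    bits = data_bytes * 8  # bytes to bits
    return bits / (link_gbps * 1e9)  # Gbps to bits per second


if __name__ == "__main__":
    dataset_bytes = 1e12  # hypothetical 1 TB (decimal) dataset
    for gbps in (10, 100, 400):
        print(f"{gbps:>3} Gbps: {transfer_seconds(dataset_bytes, gbps):,.0f} s")
```

At these idealized rates the transfer takes 800 s at 10 Gbps, 80 s at 100 Gbps, and 20 s at 400 Gbps.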

Why AI Workloads Require Different Cabling

Traditional data centers follow a “leaf-and-spine” model for cabling, which works well for standard workloads. However, AI workloads need a specialized design due to their high data volume and intensive compute requirements.

AI servers rely on high-performance GPUs that require seamless connectivity to function as a single, cohesive system.

This increases the need for:

- High-Density Fiber Connections: Up to 4-5x more than traditional setups.

- Multimode Fiber for Short Distances: Ideal for intra- and inter-rack cabling with speeds up to 400Gbps.

- Active Optical Cables (AOCs): To simplify deployments and support high-speed data transmission.
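One way to reason about the fiber multiplier is to count strands from a link inventory and then apply the 4-5x factor cited above. The Python sketch below is a hypothetical planning aid: the `RackLinks` class, the 20-server baseline rack, and its duplex ports are illustrative assumptions, not measurements or a CaTECH design method.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass
class RackLinks:
    """Hypothetical inventory of one rack's fiber links (illustrative only)."""
    servers: int
    ports_per_server: int
    fibers_per_port: int  # 2 for a duplex link; parallel optics use more

    def fiber_strands(self) -> int:
        """Total fiber strands needed to connect every port in the rack."""
        return self.servers * self.ports_per_server * self.fibers_per_port


def scaled_fiber_range(baseline: int, low: float = 4.0, high: float = 5.0) -> Tuple[int, int]:
    """Apply the article's 4-5x AI fiber multiplier to a baseline strand count."""
    return round(baseline * low), round(baseline * high)


if __name__ == "__main__":
    # Assumed traditional rack: 20 servers, 2 duplex ports each.
    baseline_rack = RackLinks(servers=20, ports_per_server=2, fibers_per_port=2)
    base = baseline_rack.fiber_strands()
    low, high = scaled_fiber_range(base)
    print(f"baseline: {base} strands -> AI-ready estimate: {low}-{high} strands")
```

For that assumed baseline of 80 strands, the 4-5x factor suggests planning for roughly 320-400 strands per rack.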

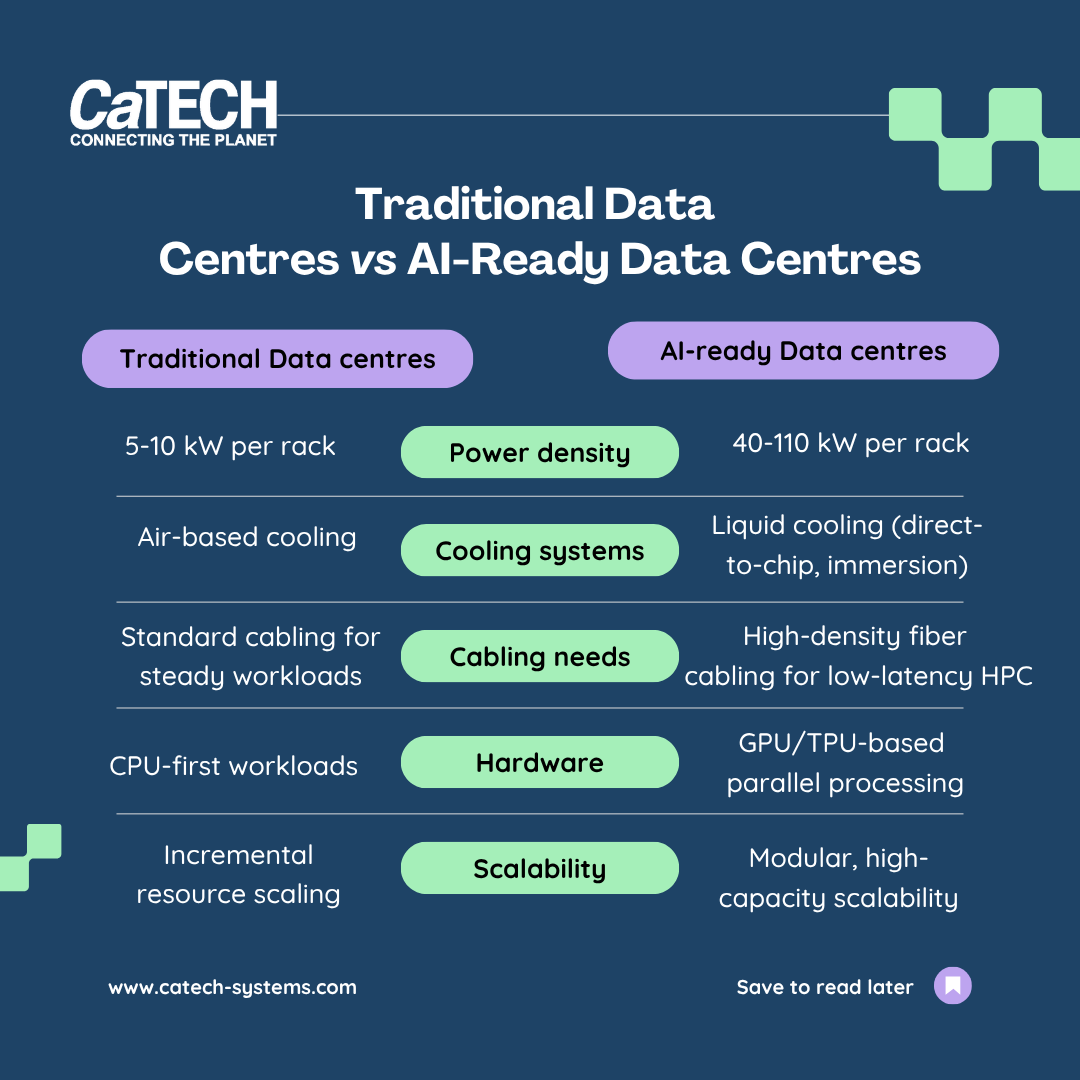

Key Differences: Traditional vs. AI Data Centers

- Chip Requirements: AI workloads run on specialized chips such as GPUs, TPUs, and NPUs, which are designed to execute AI algorithms with extreme performance. Traditional CPUs are general-purpose chips that handle a wide variety of tasks at moderate performance.

- Power Supply: AI-driven data centers require significantly more power, 40-110 kW per rack compared to 10-12 kW per rack in traditional data centers, and some designs are already exploring more than 200 kW per rack. Although AI hardware is more energy-efficient for the work it performs, it concentrates far more power in each rack, which affects both floor space and power distribution.

- Cooling Systems: Traditional air-based cooling struggles with AI workloads. AI data centers employ advanced approaches such as liquid or hybrid cooling, which are more efficient in high-density environments and better equipped to handle the increased power draw and heat output. These systems can also use water more efficiently, although the larger overall heat load may still raise total water consumption.

- Cabling Systems: AI servers require much higher cabling density (4-5 times more fiber connections) than traditional servers. A new cabling architecture is necessary to minimize latency and optimize performance.

- Design Changes: There is also a paradigm shift in data center design, making AI-ready infrastructure essential for businesses looking to stay competitive.
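Power and cooling are two sides of the same number: nearly all of the electrical power a rack draws ends up as heat that the cooling system must remove. The Python sketch below converts the per-rack figures quoted in this article into heat load, using the standard conversions 1 kW ≈ 3,412 BTU/hr and 1 ton of refrigeration = 12,000 BTU/hr. Treating all IT power as heat is a simplifying assumption, and the `heat_load` helper is hypothetical.

```python
from typing import Tuple

BTU_PER_HR_PER_KW = 3412.14   # 1 kW of electrical load is about 3,412 BTU/hr of heat
BTU_PER_HR_PER_TON = 12_000   # 1 ton of refrigeration = 12,000 BTU/hr


def heat_load(rack_kw: float) -> Tuple[float, float]:
    """Approximate (BTU/hr, tons of cooling) for a rack.

    Assumes essentially all IT power is converted to heat.
    """
    btu_hr = rack_kw * BTU_PER_HR_PER_KW
    return btu_hr, btu_hr / BTU_PER_HR_PER_TON


if __name__ == "__main__":
    # Per-rack figures from this article: a traditional rack and the AI range.
    for kw in (10, 40, 110):
        btu_hr, tons = heat_load(kw)
        print(f"{kw:>3} kW rack: {btu_hr:>9,.0f} BTU/hr = {tons:5.1f} tons of cooling")
```

A 10 kW rack works out to about 2.8 tons of cooling, while a 110 kW rack needs about 31.3 tons, which helps explain why air cooling gives way to liquid or hybrid approaches at AI densities.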

Quick Comparison Table: Traditional Data Centers vs. AI-Ready Data Centers

The Future of Data Centers

As AI continues to drive innovation, we’ll see a rise in specialized AI data centers, pushing performance and efficiency to new heights.

However, traditional data centers won’t disappear. Many organizations are likely to adopt a hybrid approach, combining traditional and AI-optimized infrastructure to meet diverse needs.

If you think the rise of AI is just a trend, think again: it is driving a fundamental shift in how businesses operate.

From training large language models such as those behind ChatGPT to running real-time AI applications, these workloads demand infrastructure that traditional data centers cannot support.

As Canada’s leader in data center cabling solutions, CaTECH Systems is at the forefront of this evolution, helping businesses build the infrastructure needed to power next-generation data centers.

We specialize in designing and implementing cabling infrastructure that ensures low latency, high bandwidth, and reliable performance for AI-driven environments.
